Introduction to the Numerical Solution of Markov Chains by William J. Stewart (hardcover)

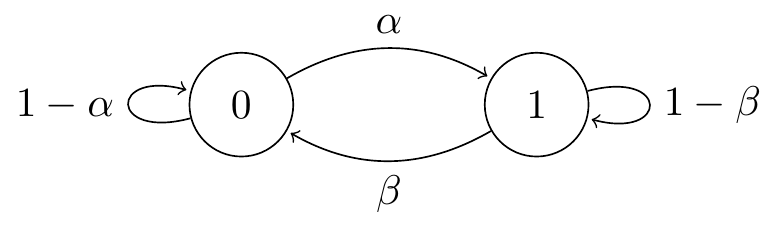

A Markov chain converges to the same steady state from different initial probability vectors - Mathematics Stack Exchange
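Here is a minimal sketch of that claim. It holds for a finite irreducible, aperiodic chain, not for every chain. The sketch uses power iteration, repeatedly computing π_{k+1} = π_k P. The two-state transition matrix `P` and the helper names `step` and `evolve` are hypothetical, chosen only for illustration. Two opposite starting distributions are pushed through the same matrix and end up at the same limit.

```python
# A minimal sketch, assuming a small row-stochastic matrix P that is
# irreducible and aperiodic (the conditions under which the limit is unique).
# P, step and evolve are hypothetical names used only for illustration.

def step(pi, P):
    """One step of pi_{k+1} = pi_k P (row vector times row-stochastic matrix)."""
    n = len(pi)
    return [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]

def evolve(pi, P, steps):
    """Apply `steps` transitions to the initial distribution pi."""
    for _ in range(steps):
        pi = step(pi, P)
    return pi

# Hypothetical two-state chain; its stationary distribution is (5/6, 1/6).
P = [[0.9, 0.1],
     [0.5, 0.5]]

a = evolve([1.0, 0.0], P, 50)  # start entirely in state 0
b = evolve([0.0, 1.0], P, 50)  # start entirely in state 1
print(a, b)  # both approach [5/6, 1/6]
```

The eigenvalues of this `P` are 1 and 0.4, so the distance to the steady state shrinks by roughly a factor of 0.4 per step. After 50 steps both vectors agree with (5/6, 1/6) to well within floating-point tolerance.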

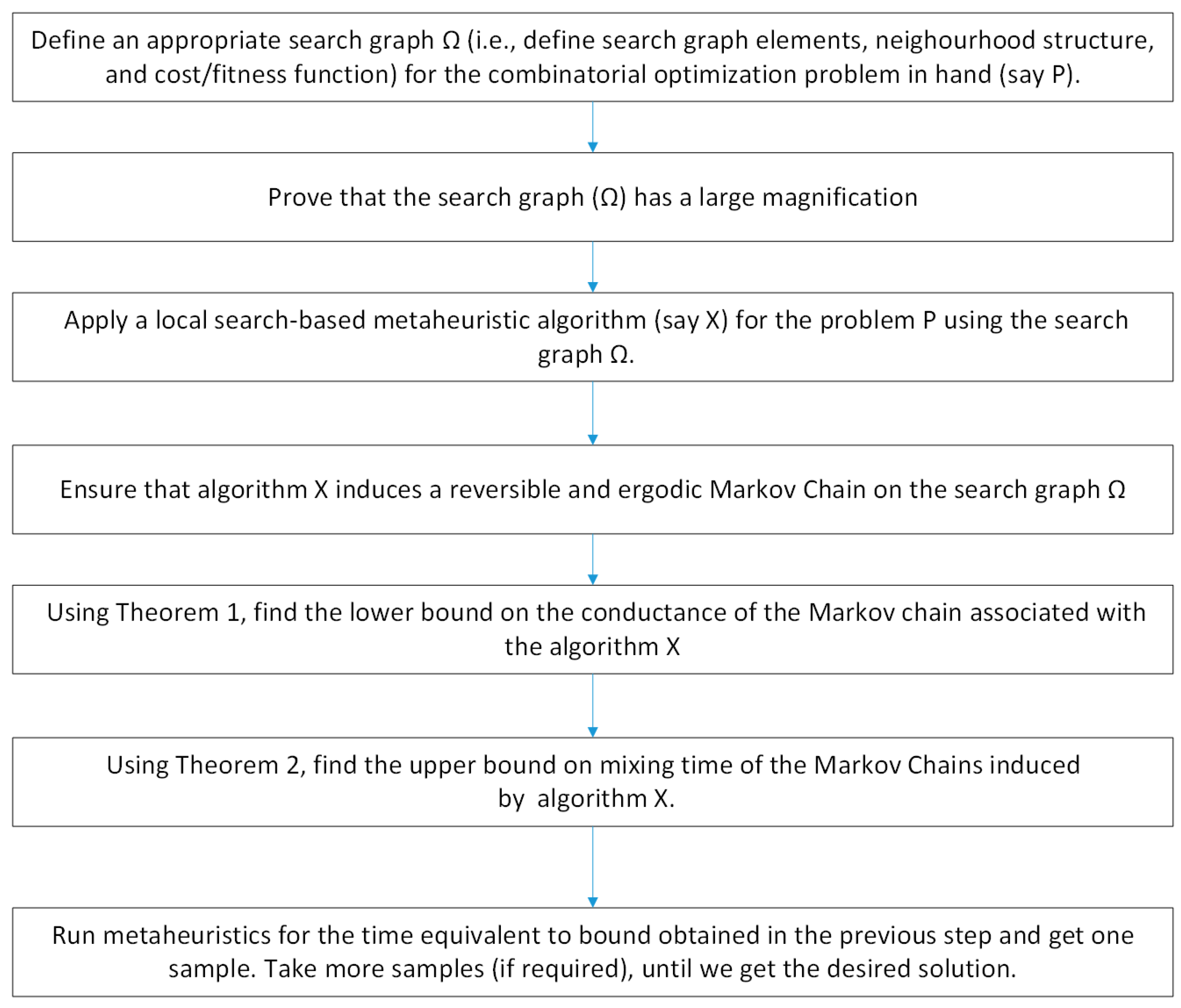

Search Graph Magnification in Rapid Mixing of Markov Chains Associated with the Local Search-Based Metaheuristics (Mathematics)

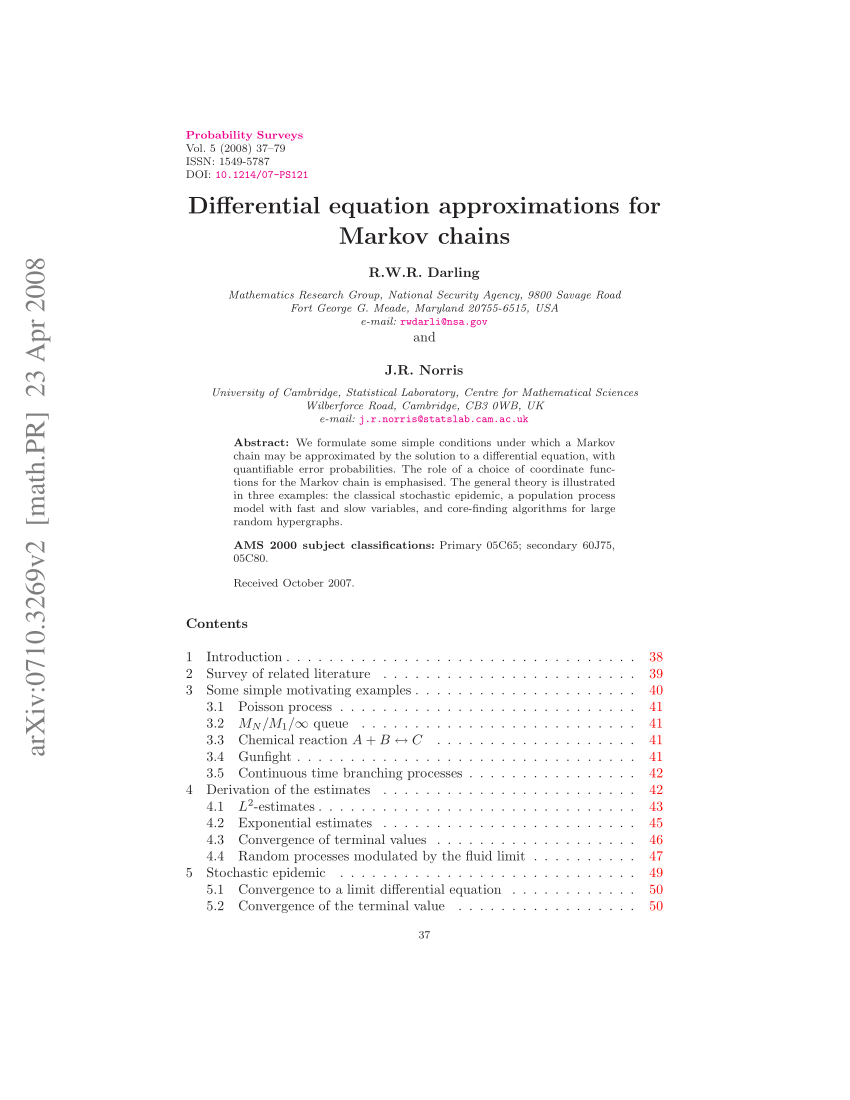

![Differential equation approximations for Markov chains | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/5157a72bd6a1781f6a3f7cb1dd3f033674f4c99d/25-Figure3-1.png)